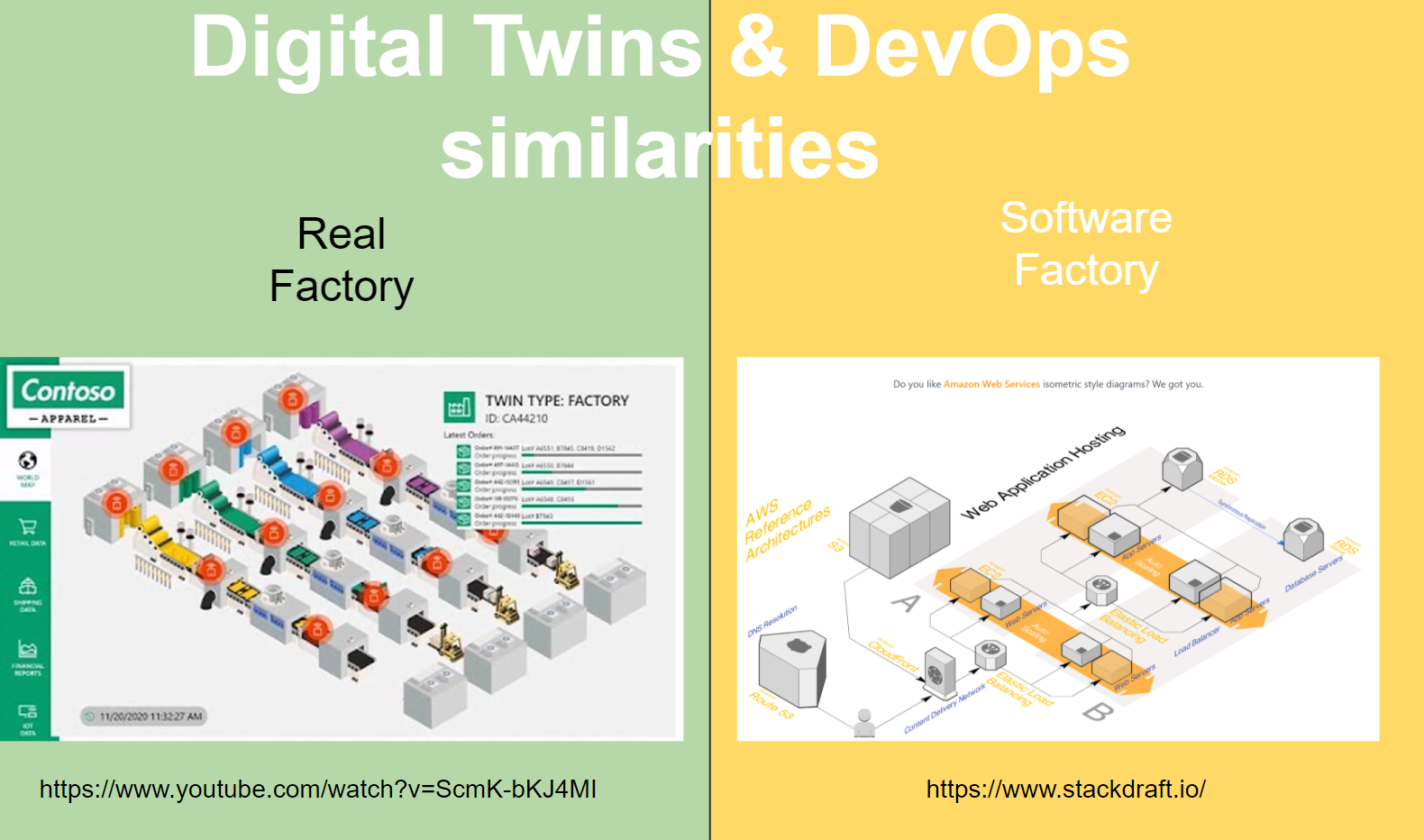

DevOps and Agile have always taken an interest in manufacturing: concepts such as Lean, pipelines, and Kanban all find their origins in the industrial world. Now manufacturing is looking at the software world in the hope of achieving the same agility. The concept of a “Digital Twin” helps them build a digital/virtual/software equivalent of the real world. In this talk I take a first pass at the similarities between the DevOps SDLC and “Digital Twins”.

Bonus: Watch Mark Burgess' wonderful YouTube series on Bigger, Faster and Smarter

This presentation is a first pass at looking at the similarities between DevOps and Digital Twins. It should not be seen as a coherent or one-two-three step explainer. While these are unfinished thoughts, I hope they spark new discussion and we can really think together. I don’t yet have many concrete takeaways. Still, it made me rethink the bi-directional nature of the SDLC, the componentisation of software/container/infrastructure components in general, and the lack of specs.

As always, I’d love to hear your thoughts! What did it make you think of? What story would you like to share? Please find a way to get back to me.

I would like to thank professor Benoit Combemale, who invited me to share my ideas with the EDT Community. Check out their YouTube channel on Engineering Digital Twins. In addition, I want to thank professor Hans Van Gheluwe, who reached out to me after being my thesis promotor in 1993! “After so many years our early ideas around modeling now stand a chance to become reality thanks to DevOps”.

If you want to jump right into my talk, here is the video; if you want a bit more context, read on.

The concept of “Digital Twins” is to create a virtual representation of the physical world, allowing us to do things such as simulation, testing, and integration. This concept has been used for everything from representing a delivery pipeline in factories and simulating oil platform changes to modeling complete cities. The cost of trying things out in the virtual world is much smaller, and we can simulate changes before we release them into the real world.

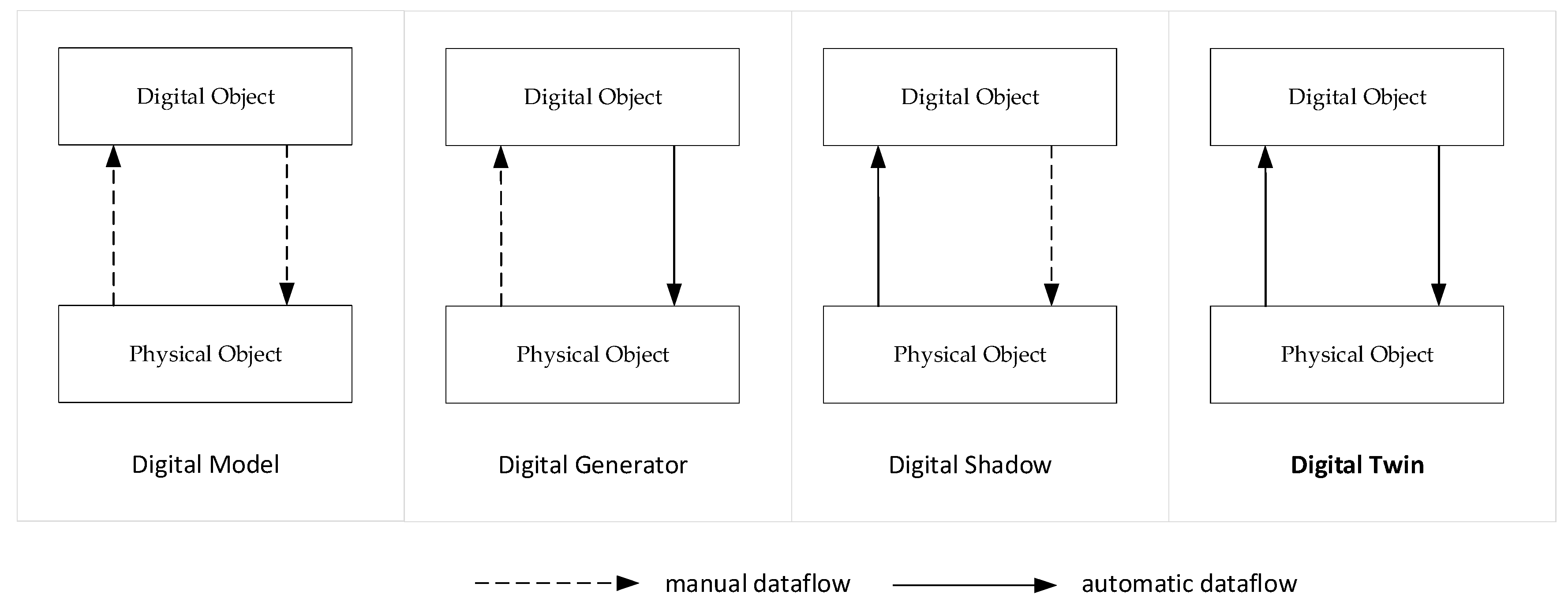

Strictly speaking, a digital twin is more than just a model: by definition it creates a bi-directional feedback system. Changes in the real world affect the model, and changes to the model impact the real world. Related concepts are MBSE (Model-Based Systems Engineering), MDE (Model-Driven Engineering), and CPS (Cyber-Physical Systems).

Source - Systems Architecture Design Pattern Catalog for Developing Digital Twins


You can see there is a lot of emphasis on the model, something that we used to do in the early days of software development: no way things went to production without a UML diagram :) Since then we have progressed, and documentation has taken a bit of a back seat: executable code with tests serves as documentation these days. In recent years we have moved back a bit and started to define things again: “as code” concepts, YAML, and specs are slowly making their way back into software development. This time they are more integrated in the workflow, instead of being diagrams disconnected from reality. Therefore the talk was initially titled: “Will models make a comeback in the SDLC?”.

TwinOps has been promoted by Dr. Jerome Hugues from Carnegie Mellon University as a way to make the industrial side more similar to DevOps. His work has found application inside the Department of Defense, combining hardware and software design. I personally wanted to come from the other side: “What can DevOps learn from industrial Digital Twins?” This might seem strange, as we are dealing with virtual systems and not with physical systems. Yet I believe we should tap into their vast experience in dealing with complex systems. This virtual/virtual aspect has also been called “Cyber/Cyber systems”. Interestingly enough, this came up in a talk from Facebook on how end-to-end tests don’t cut it any more: they have systems of virtual agents testing the production system with a set of rules, resulting in a digital twin of Facebook. All the more cues to explore further in the future!

Transcript

[00:00:00] I like to promote these things and I like to think in analogies, and therefore I’m grateful that I’m invited into this community to talk about digital twins and DevOps. The EDT community is definitely bigger, and they know more about digital twins than I ever will.

I do bring my knowledge of DevOps, and that’s how you have to look at this presentation. I’ve spent a couple of weeks looking at the literature, at what analogies I could find, and this presentation is version one of this analogy, of things that I found that might be useful and kind of cross-pollinate the two worlds.

The original title was “Will models make a comeback in the DevOps SDLC?”, because the word model kept coming back. And when I started a long time ago, models were all the rage. Everything had to be specified because it was expensive to put things into production. This is kind of the background of why [00:01:00] this title came up, because I think that eventually, through DevOps, more and more models are starting to reappear. Final disclaimer: this is a think-together. By the end, there’s going to be lots of room to give feedback and ideas.

I’m just trying to kind of pique your imagination: if you see a correlation, or anything that I missed or that you think is valuable, please share at the end. So there’s two ways of looking at this. I’m looking at it mostly from the world of DevOps, because that’s the world that I know best, but I’m trying to put this in the context of the world of model-based engineering.

A lot of the examples are things that we learned in the DevOps world, and I think they can be related to models. You do your research, and I [00:02:00] do it through Google, that’s what I do. I looked up the words DevOps, models, and digital twins, and probably the first paper I came across was something around digital twins, TwinOps, and DevOps.

And when I looked at the paper, there was a lot of looking at similarities, and there was envy of the speed of DevOps and the speed of changes, which is hard in a physical world. Which is ironic, because it was the same speed that I was envious of in the agile software development world when I started out as an admin.

In the paper, what they try to do is distill the model and convert it eventually into code. And then through that translation, that code became [00:03:00] part of the SDLC. And for me, that sounded very similar to what happened initially in the DevOps discussion around infrastructure as code. We started with virtualization, almost like through a DSL, whatever it was: CFEngine, Puppet, or Chef.

They were all about specifying what we did manually before in scripts as a reusable model. The instance creation on AWS, the speed of creation, the user creation on the cloud: everything was code, and that has just been expanding. The other day I made a tweet on everything that you could think about as code.

I think I came up with about 50 different things that eventually turned to “as code”, but most importantly infrastructure as code, policy as code, security as code, the pipeline, and the platform. So I think even if it’s not [00:04:00] actually code code, there is something of a DSL that made it “as code” as people saw it.

And expanding on the ideas from my reading, I came across the terms digital model, digital shadow, digital generator, and digital twin. For those familiar with the concepts of digital twins this is probably not a new thing, but for me, when I saw the diagram with the feedback loops, I tried to translate this into three models: continuous integration, continuous deployment, and DevOps.

With continuous integration, one of my biggest annoyances was that it stopped right before production, and then it was a manual thing. Eventually we moved from continuous integration to continuous delivery to continuous deployment, and it was all becoming automation. I think [00:05:00] DevOps, what it tries to do beyond just continuous deployment is also look at the monitoring and the feedback from the real world, the production world, and then bring that automatically into the developer world as well.

So I think there’s a lot of similarities, and both worlds have been looking at similar things. There’s a real object, the physical object or the real world, the production world. And they’re trying to sync that with a model or another environment. Both are seeking fast feedback, and some of it is manual and some of it is automated.

The other thing that immediately became clear is that everyone around digital twins talks about multidisciplinary teams, which was very similar to breaking the silos in DevOps. And that collaboration aspect with different views on the system, the different view of what is going on in the [00:06:00] real world and what is going on in the internal development or model, is something that was historically broken into two teams because they’re dealing with different problems.

But part of the solution of DevOps was breaking the barriers between those teams and making them collaborate. When you talk about looking at those models and you build these models, one thing that came up over and over again is that there’s some business goal and some business knowledge.

And sometimes, just by looking at the components, that’s not really clear. And in DevOps, we learned that we are having so many discussions about building the model that is inside people’s heads and making that more defined, making that knowledge actually externalized. The most recent examples have been event storming, where we try to express [00:07:00] whatever we’re describing in software in business terms, or the collaboration between people doing not just pair programming, but mob programming.

But eventually it’s all about conversation and the shared model and the shared view of the world: not just the mechanical, technical view of the world, but the business view of the world as well. And most of the DevOps projects that I’ve seen kind of start in this state where everything was done manually. A lot of metadata from the production process, while creating servers, while creating infrastructure, was all lost because it was not deemed important.

And one of the things we saw is that we can use data that’s out there, but we’re almost trying to reverse engineer the model from whatever is out there that was done manually. And that is typically one of the first [00:08:00] steps in DevOps projects: what’s out there? We don’t know.

Let’s try to discover and then build a model of what’s out there. And then once we’re in a state where we know what’s out there, we can start thinking about doing planned changes and work from there. And I don’t think that’s any different from when you have a model that’s out there in the wild.

And you’re trying to rebuild this digitally. There is lost metadata and there are manual actions you’re trying to reproduce somehow from the data you’re gathering from the real world. And obviously there are the nice visualizations that become possible once you have all that information, but the visualization is not just a technical thing.

They’re also conversation starters. People see them and ask: why is this red? Why is this like this? Why does the flow go like that? So the visualizations are not just there, they have meaning. And ideally they [00:09:00] relate somehow to the business value that you’re bringing with your digital twin or with your infrastructure.

And I mentioned in the beginning that, you know, when I started out, everything had to be modeled in UML, and somehow this was very hard to keep in sync. It was never exact; the discussions kept on going and kept on going. And then agile kind of put it into words: we favor working software over comprehensive documentation.

And I’m not saying this is the excuse to say, well, let’s just give up on documentation and build a product, which has been for many years a little bit the vibe in the community. I think the nice thing that turned out is that by building this model, it became [00:10:00] testable and executable.

The way documentation changed was that it was not just paper, not just a diagram, but something you can execute. And there’s a real kind of revival happening around it, almost like diagrams as code, where you create the interactive model from what you’re coding. And that is again a new leap in not just what you’re building, but also the knowledge that you’re exposing and sharing.

And once we had the CI/CD pipeline, and we had reverse engineered the infrastructure into code, this created a safety net. When we did changes, we could either branch off or rebuild the environment. So we could experiment on this, and we pretty much ended up [00:11:00] with almost feature branches: per extra thing you add, there’s a whole clone of the system.

Obviously not complete, or it doesn’t have to be complete, but enough to test what your feature is doing. And that’s quite similar to when you have a digital twin: you’re trying to change something, a new feature, a new extra thing, a new change, and you can simulate that through the twin as well. It gives you that confidence and the safety of experimentation, instead of doing it in the real world or on the real production environment.

And this later got expanded to the notion of dark launches, where we take a subset or a sampling of production, we do the change, and then we can see whether it’s successful, yes or no. I think there’s a similarity in what we’re trying to achieve in both worlds in the safety of execution and experiments.

But we also learned that just building versions and labeling all the versions [00:12:00] is not enough. There’s the concept of reproducible builds: it’s not enough to have an artifact, and it’s not enough to know all the versions of the artifact. The idea around reproducible builds is that you also record the toolchain things have been made with.

And I think that’s also another part that is happening in digital twins: whatever component, whatever part you’re bringing in there, you also want to know whether you can reproduce that, and not just by looking at the source; sometimes you need a whole toolchain to rebuild that source to make it again.

There’s definitely more work than just versioning. And then once we have all the information, we have it versioned, we have the workflow built, and we’re putting it into production, there’s this annoying thing: whatever we do, there’s going to be drift. There’s going to be drift between the [00:13:00] actual model in the real world or in production and the model we have in our test environment or in our digital twin.

The specifications might be different. The inventory of what’s out there might be different. The knowledge might be different. Drift is everywhere. And this continuous syncing, which in a digital twin is the bi-directional updating, is quite important in that concept.
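To make the idea of drift concrete, here is a minimal Python sketch that compares a modeled state with an observed production state. It is only an illustration: the flat key/value representation, the `detect_drift` function, and the example keys are assumptions made for this sketch and do not come from the talk or from any particular tool.

```python
from dataclasses import dataclass

# Marker used when a key exists on only one side (hypothetical convention).
_MISSING = "<missing>"


@dataclass(frozen=True)
class Drift:
    key: str
    modeled: object
    observed: object


def detect_drift(model: dict, observed: dict) -> list:
    """Return every key whose modeled value differs from the observed one."""
    drifts = []
    for key in sorted(set(model) | set(observed)):
        modeled_value = model.get(key, _MISSING)
        observed_value = observed.get(key, _MISSING)
        if modeled_value != observed_value:
            drifts.append(Drift(key, modeled_value, observed_value))
    return drifts


# Hypothetical example data: what the model says versus what production reports.
model = {"web.replicas": 15, "web.image": "web:1.4", "db.size": "large"}
observed = {"web.replicas": 25, "web.image": "web:1.4", "cache.size": "small"}

for drift in detect_drift(model, observed):
    print(f"{drift.key}: model={drift.modeled} production={drift.observed}")
```

Running a check like this on a schedule is one way to keep asking whether production still matches the model; deciding which side should win when they differ is the harder, bi-directional part.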

And even though people were automating the code and the deployment, it was important to keep checking whether something was different in production. Very similar to what people would do in the IT world: what’s happening and what’s not happening. And we frequently sync the two truths with each other, but sometimes reproducing the environment was really hard.

And when I saw a talk around co-simulation and co-modeling, it discussed that some of the models are black boxes and we don’t [00:14:00] know what is happening. And this reminded me of a product like LocalStack. When you have a SaaS provider like Amazon or Google, or another service that’s provided to you, you’re not able to fork or copy that environment.

What they provide you is almost like a local version or a slimmed down version, or a testable version in a black box way, that you can use to test. So containers and Docker and similar things have been instrumental to kind of create these local black box environments that people can test against without having to know all the details.

Again, a similarity between both environments. And I think this one is obvious: open, open, open. It has helped progress the communities. And the reason why is the sharing. We shared the infrastructure as code recipes. [00:15:00] We shared the monitoring dashboards.

All that sharing was instrumental in improving both worlds. And eventually this even led more and more to the idea of interchangeable components. And I think the best example in the DevOps world is Kubernetes. While you could build all of this with all the components before Kubernetes existed, it became a standard way of

cobbling load balancers, servers, storage, and all these things together. So they kind of made this into an interchangeable model where you say: I have, for example, a load balancer or an ingress controller, and I can swap different components in and out depending on what I need or the specifications I need.

Is it just for testing? Is it for five users? Is it for a thousand users? Am I willing to spend a lot of money or less money? And this is very similar, I guess, to the component world, where you need to understand the [00:16:00] specs of the components that you use and the tolerances within which your component can operate. But the fact that you can interchange them and replace them at will was a very important thing.

I think currently this is the manifestation of that in the DevOps world. And together with the components and reusable parts, there also came a way of looking at best practices for doing things. The catalog is not just about “we have these components, we know how to build them”; we also have to look at the usage patterns.

And in this example, there’s the DevOps Topologies or the best practices for open source maintainers. There’s a lot of best practices that fit into your model as well, and that’s not just the components or the technical parts that you’re assembling there. Eventually, [00:17:00] we also learned that we have the components, we know how it’s built, but we’re also using almost an approval flow when we’re not exactly sure.

We ask approval from another person to do the change. And because we have the safe environment, they can explore, they can test, they can see what impact the change will have. And we kind of have this conversation, which is deploy, discuss, deploy, discuss, until it gets to production.

And the nice thing is that most people think: oh, with infrastructure as code, I can see what the impact is. Am I going to create a stack of five servers? But there are additional benefits. Once you have the model of this whole infrastructure, you can look at other aspects. For example, cost is a very important aspect of an infrastructure.

And when the person changes the [00:18:00] model, changes the code, they can immediately see the impact on other parameters. Like in this case with Infracost: whenever they change something, they can see what the impact of the change is on the cost of that infrastructure. And I think what we learned is that it’s not just about

providing the model and being able to reproduce this; the self-servicing aspect and the instant feedback were important. If a user has to wait for a new environment, has to wait for a digital twin to do their own experiments, they will not be that incentivized to make their change. And that’s what you would be looking at: the self-servicing.

And I often say that’s the difference from traditional IT, where you could ask for something and, if they’re good, they’re going to be fast. But self-servicing has been crucial for change confidence in DevOps. And I mentioned costs and [00:19:00] the cost of doing the change, but once we have the model of what servers are running, what kind of infrastructure you’re running, what your Kubernetes looks like, we can do more intelligent things.

We can look at the capacity of your users and your usage. So sometimes we can use spot instances, which is kind of leftover capacity. And in factories, that’s the same. You look at optimizing the cost: to what production line am I sending things? And then I change things. Once you have that model, you can start using the attributes or the properties to do new things.

And what’s interesting is that there’s feedback coming back to the model. When you change something, you say: I want to launch a cluster with 15 nodes. And then your reasoning or your capacity management, like spot.io, will change this based on the demand. Your model is not 15 any more; the real [00:20:00] world has changed, maybe to 25, and they’re running on a different set of servers.

And this feedback was initially hard for the inventory systems to keep up with: can you keep the model in sync with the changes that your intelligence is making? And of course, we talked about the models of the servers. And you would think: oh, I’m going to spin up five servers here.

I have 10,000 users or something like that. But Netflix learned, for example, that models lie, and there was a practice where they would spin up a new server on AWS and benchmark it. And only if the benchmark was okay would they use it. So it’s interesting, because the model would say: just use five servers or one server. But they would actually verify this in production, and they would get that feedback only by running it in [00:21:00] production, in the real world.
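The “benchmark before you trust it” practice can be sketched roughly as below. This is a hypothetical illustration, not Netflix’s actual tooling: `acquire_verified_server`, its callback parameters, the score threshold, and the retry count are all assumptions, and the launch, benchmark, and terminate steps are stand-ins for whatever a real cloud API would provide.

```python
from typing import Callable, Optional


def acquire_verified_server(
    launch: Callable[[], str],
    benchmark: Callable[[str], float],
    terminate: Callable[[str], None],
    min_score: float,
    max_attempts: int = 3,
) -> Optional[str]:
    """Launch servers until one passes the benchmark, discarding the rest."""
    for _ in range(max_attempts):
        server = launch()
        if benchmark(server) >= min_score:
            return server  # the real world confirmed what the model promised
        terminate(server)  # the model said "fine", the benchmark disagreed
    return None


# Fake in-memory infrastructure for illustration only.
scores = {"i-1": 0.6, "i-2": 0.95}
ids = iter(scores)
terminated = []

chosen = acquire_verified_server(
    launch=lambda: next(ids),
    benchmark=scores.__getitem__,
    terminate=terminated.append,
    min_score=0.9,
)
print(chosen, terminated)
```

The point of the sketch is the feedback direction: the model proposes a server, but only a measurement taken in production decides whether it is used.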

And because we now have that feedback system, we know what the traditional, normal behavior is. We could start looking at the usage and forecasting: the Holt-Winters confidence bands will tell you whether this is normal usage. It’s a fairly simple use of forecasting, but it allows you to act preventively or create more capacity, because we know there’s usually going to be a bigger load after 8:00 PM or something like that.
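As a rough illustration of “knowing what is normal for this time of day”, the sketch below builds a band per hour of day from historical load samples. Note that this is not the forecasting method mentioned in the talk: it swaps in a much simpler per-hour mean plus or minus `k` standard deviations, and the function names, the sample data, and the choice of `k` are all assumptions.

```python
from statistics import mean, stdev


def hourly_bands(history, k=3.0):
    """history: iterable of (hour_of_day, load). Returns {hour: (low, high)}."""
    by_hour = {}
    for hour, load in history:
        by_hour.setdefault(hour, []).append(load)
    bands = {}
    for hour, loads in by_hour.items():
        if len(loads) < 2:
            continue  # not enough samples to estimate a spread
        center, spread = mean(loads), stdev(loads)
        bands[hour] = (center - k * spread, center + k * spread)
    return bands


def is_outlier(bands, hour, load):
    """True when the load falls outside the expected band for that hour."""
    low, high = bands[hour]
    return not (low <= load <= high)


# Hypothetical history: busy around 20:00, quiet around 03:00.
history = [(20, 900), (20, 1000), (20, 1100), (3, 90), (3, 100), (3, 110)]
bands = hourly_bands(history)

print(is_outlier(bands, 20, 1050))  # a load that is ordinary in the evening
print(is_outlier(bands, 3, 1050))   # the same load in the middle of the night
```

The same load can be normal at one hour and an outlier at another, which is why the band is kept per hour rather than as a single global threshold.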

So this really helps, but it also helps you to look at your outliers. With the monitoring system, you know what is normal and what’s not normal. And if it’s not normal, you can be alerted, or you can even act on this automatically. And we have the model of all the servers. We have the components, we’re building it, we’re deploying it.

But [00:22:00] a lot of the digital twins are used, for example, for testing, or testing for failures: what-if scenarios. And chaos engineering introduced that into DevOps, where we will randomly terminate instances in production. But it’s not really random; the randomness is still using the model.

It says, for example, that there always have to be five systems running, or that I can only do this when I have a load of 50% of my users on that server. So even the fault tolerance, the test for failures in chaos engineering, uses the model: what’s in production and what is in the model that you have defined.
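A minimal sketch of “random, but still using the model” could look like the following. The constraint values mirror the two examples from the talk (a minimum of five running systems, a 50% load limit), but the `Instance` type, the `pick_victim` function, and the way load is expressed as a fraction are assumptions made for this illustration and are not taken from any specific chaos engineering tool.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    name: str
    load: float  # fraction of capacity in use, 0.0 to 1.0 (assumed unit)


def pick_victim(instances, min_running=5, max_load=0.5, rng=None):
    """Pick a random instance to terminate, or None if the model forbids it."""
    rng = rng or random.Random()
    if len(instances) <= min_running:
        return None  # terminating one would drop below the modeled minimum
    candidates = [i for i in instances if i.load <= max_load]
    return rng.choice(candidates) if candidates else None


# Hypothetical fleet: only web-4 is lightly loaded enough to be a candidate.
fleet = [
    Instance("web-1", 0.9), Instance("web-2", 0.8), Instance("web-3", 0.7),
    Instance("web-4", 0.3), Instance("web-5", 0.6), Instance("web-6", 0.95),
]
victim = pick_victim(fleet, rng=random.Random(42))
print(victim)
```

The random choice only happens inside the space the model allows, which is the sense in which the experiment is controlled rather than arbitrary.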

And, maybe as a little bit of a sidestep: when things fail, or when we need to upgrade the whole world, there’s still a lot of communication that needs to happen. And in [00:23:00] games they have a concept called LiveOps. It’s usually a team that helps with these bigger changes and communications.

But when it’s a small change, they are kind of like the release managers that do the discussions and help the users change things. And in a digital twin, when there’s a bigger update, there’s a lot of communication happening to whoever is using the digital twin. I think it’s similar to LiveOps, where this kind of release management, or managing the big world change, is something that still needs to be done by humans.

From chaos engineering, we kind of moved into resilience engineering. Because now that we are able to simulate failure, to simulate the environment, to reuse the model, we can do resilience engineering. And the biggest aspect of resilience engineering is [00:24:00] that we are learning from failure.

And we use that for training. I love that digital twins are actually used not just for predicting what’s going to happen in production, but also for predicting how humans have to deal with certain problems, or how they will learn from failure, because they can replay scenarios and see whether that has been solved by a certain thing.

So you see the two worlds almost coming together. Resilience engineering comes from airplanes crashing and looking at root causes or different aspects, learning to prevent this, and also learning how people would have to respond in certain scenarios.

And this is where a digital twin can really help, almost like a simulator, to see how we can improve ourselves in reacting to these failures. And then, maybe almost as a next step, when [00:25:00] DevSecOps got introduced, we started to see that it was not just the containers or the infrastructure that needed to be deployed, but the application itself. We started to look at

the whole bill of materials of the application. Because when you want to know whether your application is secure, what you do is look at all the components. And there has been the analogy of the software bill of materials, so that we know what everything is composed of. Without all the previous work of inventory and versioning, this would have been really hard, but within DevSecOps, to see whether something is secure, we can actually look at all the components quite nicely.
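As a sketch of what “looking at all the components” can mean in practice, the example below matches a tiny bill of materials against a list of known-bad versions. Everything in it is made up for illustration: the component names, the advisory identifiers, the exact-version matching, and the `vulnerable_components` helper are assumptions, and real SBOM formats and vulnerability databases are considerably richer.

```python
def vulnerable_components(sbom, advisories):
    """Return (name, version, advisory_id) for every component with a known issue.

    sbom: list of {"name": ..., "version": ...} entries (simplified, assumed shape).
    advisories: {(name, version): advisory_id} lookup (assumed shape).
    """
    findings = []
    for component in sbom:
        key = (component["name"], component["version"])
        if key in advisories:
            findings.append((component["name"], component["version"], advisories[key]))
    return findings


# Hypothetical inventory of one application.
sbom = [
    {"name": "libexample", "version": "1.2.0"},
    {"name": "webframework", "version": "4.0.1"},
    {"name": "jsonparser", "version": "0.9.3"},
]

# Hypothetical advisory data; the identifiers are invented.
advisories = {
    ("libexample", "1.2.0"): "ADV-0001",
    ("jsonparser", "0.9.1"): "ADV-0002",
}

for name, version, advisory in vulnerable_components(sbom, advisories):
    print(f"{name} {version} is affected by {advisory}")
```

The lookup is trivial once the inventory exists; the hard part described in the talk is having an accurate, versioned inventory in the first place.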

And maybe what’s ironic is that, at a certain time, with the containers, microservices became the new rage, and [00:26:00] people were creating everything as an API. So it started out “as code”, and then it was like: everything needs to be a service. But we had the same problem. People first had to build the APIs, and they all did it manually. And then all of a sudden we said: well, what if we just create a specification or a model of the application and the API? Then all of a sudden we have executable documentation. We can see who uses what, and we can see what input and output parameters go where.

And then we saw the same movement happening: we start to have an inventory of all the API services running in an environment, in an enterprise. We start translating that into a model. We have the model that can create the tests, and then we move on from there. So even though we could have learned this by looking at DevOps, we had to relearn this for [00:27:00] APIs, and I’m pretty sure it’s the same in digital twins.

All of a sudden we see: oh, why did we do this part all manually again? Why did we not have a specification? I think part of this is that there’s a discovery process. You’re learning the problem. And then once you better understand the problem, you start to be able to document and to model it.

And I think we’re always going to hit that kind of problem in both worlds, where we’re not sure what the model is. We’re just experimenting, and from the experiments we learn what the model is. And then we can do this more structurally. And I already mentioned, in DevSecOps, looking at all the components of the environment, of an application, to see whether it’s secure.

And I came across this blog post about primitives and complex parts or elements in digital twins. What the paper tries to explain is that [00:28:00] a primitive and a complex might have different characteristics. For example, in supply chain security, imagine you have all the components of the software.

You have the whole build chain, everything that it has been made with. It’s really hard to say whether the whole environment is secure, yes or no. If we look at all the components and they’re all secure, then probably the whole environment is secure. But if one of them is not, we can’t really predict whether it’s still secure,

yes or no, because the whole environment might have different characteristics. And what’s interesting is that it’s not just the components we own. It’s also the components of third parties, whether that’s a SaaS service or an open source component: how they are built and how they are secured are important to decide whether something is secure, yes or no.

So the most important part is that the behavior of the [00:29:00] complex system is different from the behavior of the different parts, the primitives in the model. And it’s again emergent behavior that we’re looking at. The nice thing is that the more we define of that chain, the more we can start simulating on that part.

And then maybe one of the final stretches: if you know, I work at Snyk, and we do scanning for vulnerabilities, whether that’s in your container or your code. And we try to assess whether you as an organization are secure. And what we learned is that just looking at your tech is not enough.

We need to know how the tech is used in your organization. We want to understand how a customer uses this library, uses this application. [00:30:00] And part of it is, like we said, reverse engineering the technical parts, but we also have to reverse engineer the organizational flow and the behavior of the people there.

I think this is something we need if we want to make decisions on behalf of the customer: whether they should block a certain vulnerability, yes or no, whether they should fix something, yes or no. We need to have a broader view, and it’s not going to be solved by technology only. We almost need to have a virtual twin of their organization.

And I think you see this in sales and marketing organizations, where they track all the movements of the customer. And I think you want this consolidation of the technology changes and the organizational twin, if you want to call it that, so that both blend together.

And I think that is manifested a lot in the digital twin when they talk about [00:31:00] the smart ecosystem, the world where things live: there’s also a human side of the model that you have to reflect on, to see what happens. So where before my examples were more about “if I change this technical component, what will be the impact?”, maybe now we can have the pipe dream of “if I change the product,

what will happen to the customer? Will they still want to be working with this?” Can we predict this behavior? So it started to be almost a mixture of the analytical and the operational environment that we’re trying to look at, to give better recommendations for the service that we’re providing.

I think one of the things that bugged me a little bit is that when I looked at DevOps and modeling in DevOps, everything was kind of [00:32:00] already digital. So I don’t know whether, in the definition of digital twin, we can really speak of physical objects in the digital world. But maybe you have to think about it as the digital-native twin, where the digital twin is

the digital twin of the production environment or a test environment. I’d love to hear from you how you think about this problem. I probably don’t know enough of the domain language in digital twins to express this well. But I think there’s something there, in that both worlds are kind of fusing together.

And I think that’s how I would like to end this presentation. I have shown you a lot of the similarities. I do not have enough expertise in the digital twin world itself, in the industry. But I’d love to hear your examples of what resonated and what you see as different between the digital twin and the aspects that you heard about modeling [00:33:00] in DevOps.

One of the things that I am thinking about is sustainability: is managing models sustainable? In DevOps everything is so ephemeral and lightweight, and it changes; who wants to model this for a longer period? Maybe that is for the SaaS providers, who will live longer, kind of like industrial components; maybe that is where they are heading.

So maybe that is a good extension and the reason why this is going to happen. And the other part is that I’d love to hear how, in the digital twin world in the industry, enough incentives were created for vendors, because they like to shield things: shield their source, shield their services. So why would they expose this information?

Why would they provide this to you? Is this because of a payoff? If there’s enough competition, this works; if there’s not, it [00:34:00] doesn’t. So I’d love to hear from you. You can reach me, probably easiest, through Twitter at Patrick Debois. Or we can have a discussion now, or you can find me through the organizers to give some feedback.